In this PySpark article, we will see how to mask a card number in a PySpark DataFrame. Masking here means hiding the first 12 digits of a card number and displaying only the last four digits. This is an important question, especially if you are interviewing for a Data Engineer, PySpark Developer, or Python Developer position.

We will use a few PySpark functions to solve this question. To get started, we first need a PySpark DataFrame with some dummy records.


PySpark Sample DataFrame

To mask the card number, I have created a sample PySpark DataFrame with some dummy records as you can see below.

from pyspark.sql import SparkSession

# list of tuples
data = [
    ("1", "Vishvajit", "Rao", "5647 7463 7678 8625"),
    ("2", "Harsh", "Goal", "7987 7867 7862 7353"),
    ("3", "Pankaj", "Kumar", "8637 3764 4987 7864"),
    ("4", "Pranjal", "Rao", "0984 0982 6456 7673"),
    ("5", "Ritika", "Kumari", "0948 3644 2637 3846"),
    ("6", "Diyanshu", "Saini", "9874 3678 4655 3678"),
]

# columns
column_names = ["id", "first_name", "last_name", "credit_card"]

# creating spark session
spark = (
    SparkSession.builder.master("local[*]")
    .appName("www.programmingfunda.com")
    .getOrCreate()
)

# creating DataFrame
df = spark.createDataFrame(data=data, schema=column_names)
df.show()

After executing the above PySpark code, the output DataFrame will look like this.

+---+----------+---------+-------------------+
| id|first_name|last_name|        credit_card|
+---+----------+---------+-------------------+
|  1| Vishvajit|      Rao|5647 7463 7678 8625|
|  2|     Harsh|     Goal|7987 7867 7862 7353|
|  3|    Pankaj|    Kumar|8637 3764 4987 7864|
|  4|   Pranjal|      Rao|0984 0982 6456 7673|
|  5|    Ritika|   Kumari|0948 3644 2637 3846|
|  6|  Diyanshu|    Saini|9874 3678 4655 3678|
+---+----------+---------+-------------------+

Mask Card Number in PySpark DataFrame

Now we will walk through the process of masking the card number. There are multiple ways to mask card numbers in a PySpark DataFrame.

Using UDF (User Defined Function)

UDF stands for User Defined Function. A UDF in PySpark lets us write our own Python function and apply it to a PySpark DataFrame.

When no built-in function solves a specific problem, you can write a UDF and apply it to the DataFrame.

There is no single built-in function in PySpark that masks a credit card number while displaying only its last four digits.

That’s why we have to write our own function.

Let’s see how we can do that using a UDF.

Example: Mask Card Numbers in PySpark DataFrame using UDF

from pyspark.sql import SparkSession
from pyspark.sql.functions import udf
from pyspark.sql.types import StringType

# Function to mask credit card number:
# 15 asterisks cover the 12 hidden digits and 3 spaces, then the last 4 digits follow
def card_masking(card_number):
    return '***************' + card_number[-4:]

# register the function using udf(), declaring a string return type
udf_function = udf(card_masking, StringType())

# list of tuples
data = [
    ("1", "Vishvajit", "Rao", "5647 7463 7678 8625"),
    ("2", "Harsh", "Goal", "7987 7867 7862 7353"),
    ("3", "Pankaj", "Kumar", "8637 3764 4987 7864"),
    ("4", "Pranjal", "Rao", "0984 0982 6456 7673"),
    ("5", "Ritika", "Kumari", "0948 3644 2637 3846"),
    ("6", "Diyanshu", "Saini", "9874 3678 4655 3678"),
]

# columns
column_names = ["id", "first_name", "last_name", "credit_card"]

# creating spark session
spark = (
    SparkSession.builder.master("local[*]")
    .appName("www.programmingfunda.com")
    .getOrCreate()
)

# creating DataFrame
df = spark.createDataFrame(data=data, schema=column_names)

# applying udf to build the masked column
new_df = df.withColumn('masked_card_number', udf_function(df.credit_card))
new_df.show()

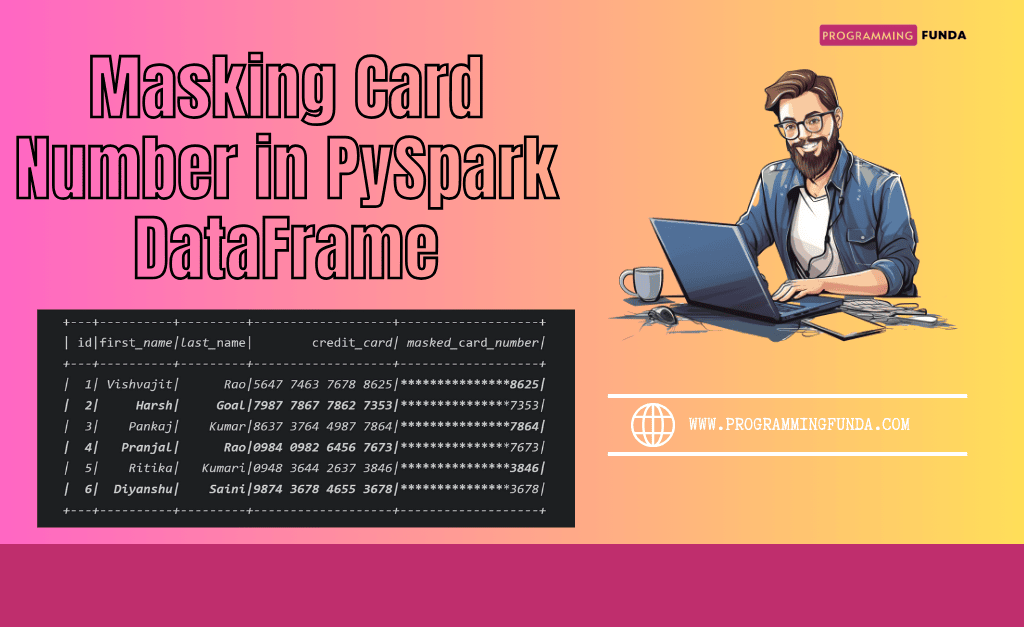

The final output will be:

+---+----------+---------+-------------------+-------------------+
| id|first_name|last_name|        credit_card| masked_card_number|
+---+----------+---------+-------------------+-------------------+
|  1| Vishvajit|      Rao|5647 7463 7678 8625|***************8625|
|  2|     Harsh|     Goal|7987 7867 7862 7353|***************7353|
|  3|    Pankaj|    Kumar|8637 3764 4987 7864|***************7864|
|  4|   Pranjal|      Rao|0984 0982 6456 7673|***************7673|
|  5|    Ritika|   Kumari|0948 3644 2637 3846|***************3846|
|  6|  Diyanshu|    Saini|9874 3678 4655 3678|***************3678|
+---+----------+---------+-------------------+-------------------+

This is how you can mask a card number in a PySpark DataFrame with the help of a UDF.
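One caveat with the UDF above: if the credit_card column contains a null, Spark passes None to the Python function, and slicing None raises a TypeError. The sketch below is a null-safe variant of the masking logic in plain Python. The name card_masking_safe is hypothetical and not part of the original example, and it derives the number of asterisks from the input length instead of hard-coding 15.

```python
def card_masking_safe(card_number):
    """Mask everything except the last four characters; pass None through."""
    # A null in the DataFrame column arrives here as None
    if card_number is None:
        return None
    # Number of characters to hide; never negative for short inputs
    hidden = max(len(card_number) - 4, 0)
    return "*" * hidden + card_number[-4:]


print(card_masking_safe("5647 7463 7678 8625"))  # ***************8625
print(card_masking_safe(None))                   # None
```

It could be registered the same way as before, for example with udf(card_masking_safe, StringType()).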

Using regexp_extract(), concat(), alias() and lit() Functions

These are built-in PySpark functions that can be combined to solve this question.

Let’s see a brief introduction to these functions.

regexp_extract():- The regexp_extract() function extracts the part of a string column that matches the regular expression passed as the second parameter. The third parameter is the index of the capture group to return, where 0 means the entire match. If nothing matches, it returns an empty string.

concat():- The concat() function takes multiple columns as parameters and joins their values into a single string. Static values can be included by wrapping them with lit().

alias():- The alias() method gives a column a new name in the result. The example below names the new column through withColumn(), so alias() is only needed when the same expression is built inside select().

lit():- The lit() function creates a column from a literal (constant) value.

Note:- regexp_extract(), concat(), and lit() are defined in the pyspark.sql.functions module, which is why you have to import them from pyspark.sql.functions. alias() is a method of the Column class and needs no import.
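To make the pattern concrete, here is a small sketch that uses Python's own re module rather than Spark. Spark evaluates regexp_extract() with Java regular expressions, but for a simple pattern such as \d{4}$ (exactly four digits at the end of the string) the two engines are assumed to behave the same. The helper name last_four_digits is hypothetical.

```python
import re

# Exactly four digits anchored at the end of the string
LAST_FOUR = re.compile(r"\d{4}$")


def last_four_digits(card_number):
    """Return the trailing four digits, or an empty string when there is no match."""
    match = LAST_FOUR.search(card_number)
    # group(0) is the whole match, like group index 0 in regexp_extract()
    return match.group(0) if match else ""


print(last_four_digits("5647 7463 7678 8625"))  # 8625
print(repr(last_four_digits("no digits here")))  # ''
```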

Now, let’s move on to the example, where we will put these functions to use.

Mask Card Numbers in PySpark DataFrame using regexp_extract(), concat(), alias() and lit()

from pyspark.sql import SparkSession
from pyspark.sql.functions import regexp_extract, concat, lit

# list of tuples
data = [
    ("1", "Vishvajit", "Rao", "5647 7463 7678 8625"),
    ("2", "Harsh", "Goal", "7987 7867 7862 7353"),
    ("3", "Pankaj", "Kumar", "8637 3764 4987 7864"),
    ("4", "Pranjal", "Rao", "0984 0982 6456 7673"),
    ("5", "Ritika", "Kumari", "0948 3644 2637 3846"),
    ("6", "Diyanshu", "Saini", "9874 3678 4655 3678"),
]

# columns
column_names = ["id", "first_name", "last_name", "credit_card"]

# creating spark session
spark = (
    SparkSession.builder.master("local[*]")
    .appName("www.programmingfunda.com")
    .getOrCreate()
)

# creating DataFrame
df = spark.createDataFrame(data=data, schema=column_names)

# r"\d{4}$" matches exactly four digits at the end of the string;
# group index 0 returns the whole match
new_df = df.withColumn(
    'masked_card',
    concat(lit("***************"), regexp_extract("credit_card", r"\d{4}$", 0))
)
new_df.show()

The final output will be:

+---+----------+---------+-------------------+-------------------+
| id|first_name|last_name|        credit_card|        masked_card|
+---+----------+---------+-------------------+-------------------+
|  1| Vishvajit|      Rao|5647 7463 7678 8625|***************8625|
|  2|     Harsh|     Goal|7987 7867 7862 7353|***************7353|
|  3|    Pankaj|    Kumar|8637 3764 4987 7864|***************7864|
|  4|   Pranjal|      Rao|0984 0982 6456 7673|***************7673|
|  5|    Ritika|   Kumari|0948 3644 2637 3846|***************3846|
|  6|  Diyanshu|    Saini|9874 3678 4655 3678|***************3678|
+---+----------+---------+-------------------+-------------------+

This is how you can hide the 12 digits of the card and display only the last four digits of the card number.
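As a variation on the built-in approach, a single regular expression with a lookahead can mask the digits while keeping the spaces between the groups. The sketch below demonstrates the pattern with Python's re module; the names MASK_PATTERN and mask_keep_layout are hypothetical. Since Java regular expressions also support lookahead, the same pattern should work with regexp_replace() from pyspark.sql.functions, though that is an assumption worth testing on your Spark version.

```python
import re

# A digit is replaced only when at least four more digits follow it,
# so the last four digits and all separators are left untouched
MASK_PATTERN = re.compile(r"\d(?=(?:\D*\d){4})")


def mask_keep_layout(card_number):
    """Replace every digit except the last four with an asterisk."""
    return MASK_PATTERN.sub("*", card_number)


print(mask_keep_layout("5647 7463 7678 8625"))  # **** **** **** 8625
print(mask_keep_layout("5647-7463-7678-8625"))  # ****-****-****-8625
```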


Conclusion

You can use either approach to mask a card number in a PySpark DataFrame; both solutions are useful. There is a good chance that this question will be asked in a Data Engineer, PySpark Developer, or Python Developer interview to test your logical skills.

If you found this article helpful, please share it and keep visiting for more PySpark tutorials.

Thanks for visiting.