Hi PySpark Developers, I how you are doing well. In today’s articles, we are going to learn all about how to fill null values in PySpark DataFrame with zero ( 0 ), constant value, string, empty string, etc.

PySpark provides two methods called fillna() and fill() that are always used to fill missing values in PySpark DataFrame in order to perform any kind of transformation and actions.

Handling missing values in PySpark DataFrame is one of the most common tasks by PySpark Developers, Data Engineers, Data Analysts, etc. Before processing the PySpark DataFrame we have to handle all those missing values or null values or none values in Pyspark DataFrame.

Headings of Contents

Why do we need to fill null values in PySpark DataFrame?

Being a PySpark Developer, Data Engineer, Data Analyst, etc when you load Dataset from different-difference source systems in order to extract some insights from that data for organizational decisions, Then it might be a high probability that the dataset contained some missing values, So it’s our responsibility to handle all those missing values and then start processing towards the new data. Here missing values means null values or None values. Before processing the Data, we have to fill all the missing values with some other values like zero, empty string, any constant value, etc.

Before using the PySpark fillna() and fill() method, let’s create a sample PySpark DataFrame along with some null values.

Create PySpark DataFrame From CSV

Here, I have prepared sample CSV files along with some null values. Now I am about to load that CSV file using PySpark.

sample_data.csv

First Name,Last Name,Gender,Country,Age,Date,Id

Dulce,Abril,Female,,32,2017-10-15,1562

Mara,,Female,Great Britain,,2016-08-16,1582

Philip,Gent,Male,,36,2015-05-21,2587

Kathleen,Hanner,Female,,25,,1876

Vishvajit,Rao,,India,,2023-04-24,1230

Harry,,Male,India,27,2024-05-23,5664

Pankaj,,Male,,30,2019-09-23,434

Harshita,Kumari,,USA,24,2018-06-20,352PySpark Code to load the above sample_data.csv file:

from pyspark.sql import SparkSession

# creating spark session

spark = SparkSession.builder.appName("testing").getOrCreate()

# creating PySpark DataFrame

dataframe = spark.read.option('header', 'true').csv('sample_data.csv')

# displaying

dataframe.show(truncate=False)

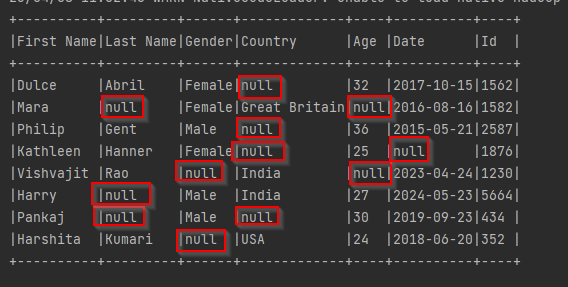

After executing the above code, The DataFrame will be like this.

+----------+---------+------+-------------+----+----------+----+

|First Name|Last Name|Gender|Country |Age |Date |Id |

+----------+---------+------+-------------+----+----------+----+

|Dulce |Abril |Female|null |32 |2017-10-15|1562|

|Mara |null |Female|Great Britain|null|2016-08-16|1582|

|Philip |Gent |Male |null |36 |2015-05-21|2587|

|Kathleen |Hanner |Female|null |25 |null |1876|

|Vishvajit |Rao |null |India |null|2023-04-24|1230|

|Harry |null |Male |India |27 |2024-05-23|5664|

|Pankaj |null |Male |null |30 |2019-09-23|434 |

|Harshita |Kumari |null |USA |24 |2018-06-20|352 |

+----------+---------+------+-------------+----+----------+----+As you can see in the above table, some null values exist. In fact, I have highlighted all those null values in red color in the below screenshot.

Let’s explain the above PySpark Code:

- Firstly, I have imported the SparkSession class from the pyspark.sql module.

- Second, I have created a spark session with the help of SparkSession.builder.appName(“testing”).getOrCreate().

- Third, I have used the read attribute and csv() method to load the sample_data.csv file.

- Fourth, I have displayed the PySpark DataFrame.

Now, It’s time to fill or replace all null values.

What is the PySpark DataFrame fillna() method

PySpark fillna() is a PySpark DataFrame method that was introduced in spark version 1.3.1. PySpark DataFrame fillna() method is used to replace the null values with other specified values. It accepts two parameter values and subsets.

- value:- It is a value that will come in place of null values. The Data Type of value can be string, dict, bool, int, and float.

- subset:- It is an optional parameter that represents the list of column names to consider. if it is not provided, then all the null values will be replaced in the PySpark DataFrame.

Let’s use fillna() method to fill null values in PySpark DataFrame, we will take the above created DataFrame to replace null values.

Example: Replace Null values with an empty string

For example, I want to replace all the null values with an empty string.

from pyspark.sql import SparkSession

# creating spark session

spark = SparkSession.builder.appName("testing").getOrCreate()

# creating PySpark DataFrame

dataframe = spark.read.option('header', 'true').csv('sample_data.csv')

# replace all the null values with empty string

dataframe = dataframe.fillna('')

# displaying

dataframe.show(truncate=False)

After replacing null values with empty strings the output will be.

+----------+---------+------+-------------+---+----------+----+

|First Name|Last Name|Gender|Country |Age|Date |Id |

+----------+---------+------+-------------+---+----------+----+

|Dulce |Abril |Female| |32 |2017-10-15|1562|

|Mara | |Female|Great Britain| |2016-08-16|1582|

|Philip |Gent |Male | |36 |2015-05-21|2587|

|Kathleen |Hanner |Female| |25 | |1876|

|Vishvajit |Rao | |India | |2023-04-24|1230|

|Harry | |Male |India |27 |2024-05-23|5664|

|Pankaj | |Male | |30 |2019-09-23|434 |

|Harshita |Kumari | |USA |24 |2018-06-20|352 |

+----------+---------+------+-------------+---+----------+----+Example: fill null values with 0 in PySpark DataFrame:

We can also replace null values with zero, Pass zero into fillna() method.

from pyspark.sql import SparkSession

# creating spark session

spark = SparkSession.builder.appName("testing").getOrCreate()

# creating PySpark DataFrame

dataframe = spark.read.option('header', 'true').csv('sample_data.csv')

dataframe.printSchema()

# replace all the null values with empty string

dataframe = dataframe.fillna('0')

# displaying

dataframe.show(truncate=False)

Output

+----------+---------+------+-------------+---+----------+----+

|First Name|Last Name|Gender|Country |Age|Date |Id |

+----------+---------+------+-------------+---+----------+----+

|Dulce |Abril |Female|0 |32 |2017-10-15|1562|

|Mara |0 |Female|Great Britain|0 |2016-08-16|1582|

|Philip |Gent |Male |0 |36 |2015-05-21|2587|

|Kathleen |Hanner |Female|0 |25 |0 |1876|

|Vishvajit |Rao |0 |India |0 |2023-04-24|1230|

|Harry |0 |Male |India |27 |2024-05-23|5664|

|Pankaj |0 |Male |0 |30 |2019-09-23|434 |

|Harshita |Kumari |0 |USA |24 |2018-06-20|352 |

+----------+---------+------+-------------+---+----------+----+So far we have seen how to fill all null values in PySpark DataFrame with an empty string and zero, In fact, you can pass anything in place of zero. But we can also replace null values in a particular column of PySpark DataFrame.

To fill particular columns’ null values in PySpark DataFrame, We have to pass all the column names and their values as Python Dictionary to value parameter to the fillna() method.

In The main data frame, I am about to fill 0 to the age column and 2023-04-10 to the Date column and the rest will be null itself.

from pyspark.sql import SparkSession

# creating spark session

spark = SparkSession.builder.appName("testing").getOrCreate()

# creating PySpark DataFrame

dataframe = spark.read.option('header', 'true').csv('sample_data.csv')

dataframe.printSchema()

# replace all the null values with empty string

dataframe = dataframe.fillna({"age": 0, "Date": '2023-04-10'})

# displaying

dataframe.show(truncate=False)

Output

+----------+---------+------+-------------+---+----------+----+

|First Name|Last Name|Gender|Country |Age|Date |Id |

+----------+---------+------+-------------+---+----------+----+

|Dulce |Abril |Female|null |32 |2017-10-15|1562|

|Mara |null |Female|Great Britain|0 |2016-08-16|1582|

|Philip |Gent |Male |null |36 |2015-05-21|2587|

|Kathleen |Hanner |Female|null |25 |2023-04-10|1876|

|Vishvajit |Rao |null |India |0 |2023-04-24|1230|

|Harry |null |Male |India |27 |2024-05-23|5664|

|Pankaj |null |Male |null |30 |2019-09-23|434 |

|Harshita |Kumari |null |USA |24 |2018-06-20|352 |

+----------+---------+------+-------------+---+----------+----+Even you can pass column names explicitly to the subset parameter in order to replace null values.

from pyspark.sql import SparkSession

# creating spark session

spark = SparkSession.builder.appName("testing").getOrCreate()

# creating PySpark DataFrame

dataframe = spark.read.option('header', 'true').csv('sample_data.csv')

dataframe.printSchema()

# replace all the null values with empty string

dataframe = dataframe.fillna('0', subset=['Last Name', 'Gender'])

# displaying

dataframe.show(truncate=False)

Output

+----------+---------+------+-------------+----+----------+----+

|First Name|Last Name|Gender|Country |Age |Date |Id |

+----------+---------+------+-------------+----+----------+----+

|Dulce |Abril |Female|null |32 |2017-10-15|1562|

|Mara |0 |Female|Great Britain|null|2016-08-16|1582|

|Philip |Gent |Male |null |36 |2015-05-21|2587|

|Kathleen |Hanner |Female|null |25 |null |1876|

|Vishvajit |Rao |0 |India |null|2023-04-24|1230|

|Harry |0 |Male |India |27 |2024-05-23|5664|

|Pankaj |0 |Male |null |30 |2019-09-23|434 |

|Harshita |Kumari |0 |USA |24 |2018-06-20|352 |

+----------+---------+------+-------------+----+----------+----+PySpark DataFrame fill() Method

The fill() is a method that is used to replace null values in PySpark DataFrame.PySpark DataFrame fill() and fillna() methods are aliases of each other. The parameter of the fill() method will be the same as fillna() method.

Example: Fill null values in PySpark DataFrame using fill() method

from pyspark.sql import SparkSession

# creating spark session

spark = SparkSession.builder.appName("testing").getOrCreate()

# creating PySpark DataFrame

dataframe = spark.read.option('header', 'true').csv('sample_data.csv')

# replace all the null values with empty string

dataframe = dataframe.na.fill('0')

# displaying

dataframe.show(truncate=False)

Output

+----------+---------+------+-------------+---+----------+----+

|First Name|Last Name|Gender|Country |Age|Date |Id |

+----------+---------+------+-------------+---+----------+----+

|Dulce |Abril |Female|0 |32 |2017-10-15|1562|

|Mara |0 |Female|Great Britain|0 |2016-08-16|1582|

|Philip |Gent |Male |0 |36 |2015-05-21|2587|

|Kathleen |Hanner |Female|0 |25 |0 |1876|

|Vishvajit |Rao |0 |India |0 |2023-04-24|1230|

|Harry |0 |Male |India |27 |2024-05-23|5664|

|Pankaj |0 |Male |0 |30 |2019-09-23|434 |

|Harshita |Kumari |0 |USA |24 |2018-06-20|352 |

+----------+---------+------+-------------+---+----------+----+Example: Fill null values with string in PySpark DataFrame using fill() method

from pyspark.sql import SparkSession

# creating spark session

spark = SparkSession.builder.appName("testing").getOrCreate()

# creating PySpark DataFrame

dataframe = spark.read.option('header', 'true').csv('sample_data.csv')

# replace all the null values with empty string

dataframe = dataframe.na.fill('N/A')

# displaying

dataframe.show(truncate=False)

Output

+----------+---------+------+-------------+---+----------+----+

|First Name|Last Name|Gender|Country |Age|Date |Id |

+----------+---------+------+-------------+---+----------+----+

|Dulce |Abril |Female|N/A |32 |2017-10-15|1562|

|Mara |N/A |Female|Great Britain|N/A|2016-08-16|1582|

|Philip |Gent |Male |N/A |36 |2015-05-21|2587|

|Kathleen |Hanner |Female|N/A |25 |N/A |1876|

|Vishvajit |Rao |N/A |India |N/A|2023-04-24|1230|

|Harry |N/A |Male |India |27 |2024-05-23|5664|

|Pankaj |N/A |Male |N/A |30 |2019-09-23|434 |

|Harshita |Kumari |N/A |USA |24 |2018-06-20|352 |

+----------+---------+------+-------------+---+----------+----+PySpark Other Tutorials

- How to convert PySpark Row To Dictionary

- PySpark Column Class with Examples

- PySpark Sort Function with Examples

- How to read CSV files using PySpark

- PySpark col() Function with Examples

- Convert PySpark DataFrame Column to List

- How to Write PySpark DataFrame to CSV

- How to Convert PySpark DataFrame to JSON

- How to Apply groupBy in Pyspark DataFrame

- Merge Two DataFrames in PySpark with the Same Column Names

- How to Count Null and NaN Values in Each Column in PySpark DataFrame?

Conclusion

As you have seen, how we have filled the null values in PySpark DataFame along with examples.PySpark DataFrame fillna() and fill() methods are the most useful method to fill the null values with string, float, any literal, etc.

If you found this article helpful, Please share and keep visiting for further PySpark interesting tutorials.