Hi folks, in this article you will learn how to convert a PySpark DataFrame to JSON (JavaScript Object Notation). Spark offers multiple ways to write DataFrames to JSON, and we will go through them one by one with the help of examples.


What is DataFrame in Spark?

A DataFrame is a distributed collection of data organized into named columns. It is similar to a table (rows and columns) in SQL or a spreadsheet. A DataFrame can be used to store and manipulate data in tabular format, and SQL-style operations can be performed on a PySpark or Spark DataFrame in a distributed environment.

The table below is a representation of a DataFrame.

    +----------+----------+-----------------+----------+------+
    |first_name| last_name|      designation|department|salary|
    +----------+----------+-----------------+----------+------+
    |    Pankaj|     Kumar|        Developer|        IT| 33000|
    |      Hari|    Sharma|        Developer|        IT| 40000|
    |   Anshika|    Kumari|     HR Executive|        HR| 25000|
    |  Shantanu|     Saini|    Manual Tester|        IT| 25000|
    |  Avantika|Srivastava|Senior HR Manager|        HR| 45000|
    |       Jay|     Kumar|Junior Accountant|   Account| 23000|
    |     Vinay|     Singh|Senior Accountant|   Account| 40000|
    +----------+----------+-----------------+----------+------+

What is JSON?

JSON stands for JavaScript Object Notation. It is a lightweight, text-based data format built from key-value objects and ordered arrays. JSON is mostly used to transmit data between clients, web applications, and servers. Its syntax is derived from JavaScript object literals, which is why it is called JavaScript Object Notation.
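To make the format concrete, here is a minimal sketch that uses Python’s standard json module to turn a dictionary into JSON text and back. The employee record is a shortened, illustrative version of one row from this article’s sample data.

```python
import json

# A Python dictionary describing one employee (illustrative subset of the sample data).
employee = {"first_name": "Pankaj", "department": "IT", "salary": 33000}

# Serialize the dictionary to a JSON string.
text = json.dumps(employee)
print(text)

# Parse the JSON string back into a Python dictionary.
restored = json.loads(text)
print(restored["salary"])
```

The round trip returns a dictionary equal to the original, which is what makes JSON convenient for exchanging data between programs.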

Ways to write PySpark DataFrame to JSON:

There are three ways to write PySpark DataFrame to JSON ( JavaScript Object Notation ).

- Using the toJSON() Method

- Using the toPandas() Method

- Using write.json() method

Before converting a DataFrame to JSON we need a PySpark DataFrame, so let’s see how to create one.

Creating PySpark DataFrame

To create a PySpark DataFrame, we first import the SparkSession class from the pyspark.sql module. The SparkSession class has an attribute called builder, an instance of the Builder class, which is used to create a Spark session.

The Spark session is the entry point of any Spark application. It is used to create Spark DataFrames and RDDs (Resilient Distributed Datasets) and to perform operations on top of them.

    from pyspark.sql import SparkSession

    data = [
        ("Pankaj", "Kumar", "Developer", "IT", 33000),
        ("Hari", "Sharma", "Developer", "IT", 40000),
        ("Anshika", "Kumari", "HR Executive", "HR", 25000),
        ("Shantanu", "Saini", "Manual Tester", "IT", 25000),
        ("Avantika", "Srivastava", "Senior HR Manager", "HR", 45000),
        ("Jay", "Kumar", "Junior Accountant", "Account", 23000),
        ("Vinay", "Singh", "Senior Accountant", "Account", 40000),
    ]

    columns = ["first_name", "last_name", "designation", "department", "salary"]

    # creating spark session
    spark = SparkSession.builder.appName("testing").getOrCreate()

    # creating dataframe
    df = spark.createDataFrame(data, columns)

    # displaying dataframe
    df.show(truncate=True)

After executing the above code, the created DataFrame will look like this.

    +----------+----------+-----------------+----------+------+
    |first_name| last_name|      designation|department|salary|
    +----------+----------+-----------------+----------+------+
    |    Pankaj|     Kumar|        Developer|        IT| 33000|
    |      Hari|    Sharma|        Developer|        IT| 40000|
    |   Anshika|    Kumari|     HR Executive|        HR| 25000|
    |  Shantanu|     Saini|    Manual Tester|        IT| 25000|
    |  Avantika|Srivastava|Senior HR Manager|        HR| 45000|
    |       Jay|     Kumar|Junior Accountant|   Account| 23000|
    |     Vinay|     Singh|Senior Accountant|   Account| 40000|
    +----------+----------+-----------------+----------+------+

Now let’s see all the possible ways to save a PySpark DataFrame to JSON.

Write PySpark DataFrame to JSON

We already know that there are three ways to save a DataFrame to JSON in PySpark. Let’s explore all of them.

Convert PySpark DataFrame to JSON using DataFrame toJSON() method

toJSON() is a method of the PySpark DataFrame class. It returns an RDD of strings in which each row of the DataFrame is serialized as one JSON document. Calling collect() on that RDD brings the strings back to the driver as a Python list, so this approach is best suited to small results.
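Because each string is a self-contained JSON object, the collected result can be processed with Python’s standard json module. The sketch below does not call Spark; it hard-codes two strings in the shape that toJSON().collect() returns (copied from this article’s sample data), and the variable names json_rows, records, and json_array are illustrative.

```python
import json

# Two strings in the shape returned by df.toJSON().collect()
# (hard-coded here so the sketch runs without a Spark session).
json_rows = [
    '{"first_name":"Pankaj","last_name":"Kumar","designation":"Developer","department":"IT","salary":33000}',
    '{"first_name":"Hari","last_name":"Sharma","designation":"Developer","department":"IT","salary":40000}',
]

# Parse every JSON string into a Python dictionary.
records = [json.loads(row) for row in json_rows]
print(records[0]["first_name"])

# Work with the parsed values like any other Python data.
total_salary = sum(record["salary"] for record in records)
print(total_salary)

# Join the row strings into one JSON array string if a single document is needed.
json_array = "[" + ",".join(json_rows) + "]"
```

Joining the strings with commas inside square brackets is enough to build a valid JSON array, since every element is already valid JSON on its own.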

    from pyspark.sql import SparkSession

    data = [
        ("Pankaj", "Kumar", "Developer", "IT", 33000),
        ("Hari", "Sharma", "Developer", "IT", 40000),
        ("Anshika", "Kumari", "HR Executive", "HR", 25000),
        ("Shantanu", "Saini", "Manual Tester", "IT", 25000),
        ("Avantika", "Srivastava", "Senior HR Manager", "HR", 45000),
        ("Jay", "Kumar", "Junior Accountant", "Account", 23000),
        ("Vinay", "Singh", "Senior Accountant", "Account", 40000),
    ]

    columns = ["first_name", "last_name", "designation", "department", "salary"]

    # creating spark session
    spark = SparkSession.builder.appName("testing").getOrCreate()

    # creating dataframe
    df = spark.createDataFrame(data, columns)

    # converting PySpark DataFrame to JSON
    json_data = df.toJSON().collect()

    print(json_data)

Output:

    ['{"first_name":"Pankaj","last_name":"Kumar","designation":"Developer","department":"IT","salary":33000}',
     '{"first_name":"Hari","last_name":"Sharma","designation":"Developer","department":"IT","salary":40000}',
     '{"first_name":"Anshika","last_name":"Kumari","designation":"HR Executive","department":"HR","salary":25000}',
     '{"first_name":"Shantanu","last_name":"Saini","designation":"Manual Tester","department":"IT","salary":25000}',
     '{"first_name":"Avantika","last_name":"Srivastava","designation":"Senior HR Manager","department":"HR","salary":45000}',
     '{"first_name":"Jay","last_name":"Kumar","designation":"Junior Accountant","department":"Account","salary":23000}',
     '{"first_name":"Vinay","last_name":"Singh","designation":"Senior Accountant","department":"Account","salary":40000}']

Convert PySpark DataFrame to JSON using toPandas() Method

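toPandas() is also a method of the PySpark DataFrame class. It converts the PySpark DataFrame to a pandas DataFrame, which can then be serialized with the pandas to_json() method.

toPandas() does not accept any parameters and is only available if pandas is installed. Because it collects all the data to the driver, it should only be used when the DataFrame is small enough to fit in the driver’s memory.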
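On the pandas side, to_json() can produce either a single JSON array or newline-delimited JSON. The following is a minimal sketch that assumes pandas is installed and builds a small pandas DataFrame directly instead of going through Spark; the two-row data and the names pdf, as_array, and as_lines are illustrative.

```python
import json

import pandas as pd

# A small pandas DataFrame standing in for the result of df.toPandas().
pdf = pd.DataFrame({"first_name": ["Pankaj", "Hari"], "salary": [33000, 40000]})

# orient="records" produces one JSON array containing an object per row.
as_array = pdf.to_json(orient="records")
print(as_array)

# Adding lines=True produces one JSON object per line instead of an array.
as_lines = pdf.to_json(orient="records", lines=True)
print(as_lines)

# Both forms carry the same records once parsed.
from_array = json.loads(as_array)
from_lines = [json.loads(line) for line in as_lines.splitlines() if line]
```

The array form suits APIs that expect a single JSON document, while the line-delimited form matches the one-object-per-line layout that Spark itself writes.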

    from pyspark.sql import SparkSession

    data = [
        ("Pankaj", "Kumar", "Developer", "IT", 33000),
        ("Hari", "Sharma", "Developer", "IT", 40000),
        ("Anshika", "Kumari", "HR Executive", "HR", 25000),
        ("Shantanu", "Saini", "Manual Tester", "IT", 25000),
        ("Avantika", "Srivastava", "Senior HR Manager", "HR", 45000),
        ("Jay", "Kumar", "Junior Accountant", "Account", 23000),
        ("Vinay", "Singh", "Senior Accountant", "Account", 40000),
    ]

    columns = ["first_name", "last_name", "designation", "department", "salary"]

    # creating spark session
    spark = SparkSession.builder.appName("testing").getOrCreate()

    # creating dataframe
    df = spark.createDataFrame(data, columns)

    # convert pyspark dataframe to pandas dataframe
    pandas_df = df.toPandas()

    # convert pandas df to json
    json_data = pandas_df.to_json(orient='records')

    print(json_data)

Output:

    [{"first_name":"Pankaj","last_name":"Kumar","designation":"Developer","department":"IT","salary":33000},{"first_name":"Hari","last_name":"Sharma","designation":"Developer","department":"IT","salary":40000},{"first_name":"Anshika","last_name":"Kumari","designation":"HR Executive","department":"HR","salary":25000},{"first_name":"Shantanu","last_name":"Saini","designation":"Manual Tester","department":"IT","salary":25000},{"first_name":"Avantika","last_name":"Srivastava","designation":"Senior HR Manager","department":"HR","salary":45000},{"first_name":"Jay","last_name":"Kumar","designation":"Junior Accountant","department":"Account","salary":23000},{"first_name":"Vinay","last_name":"Singh","designation":"Senior Accountant","department":"Account","salary":40000}]

Convert PySpark DataFrame to JSON using write.json() Method

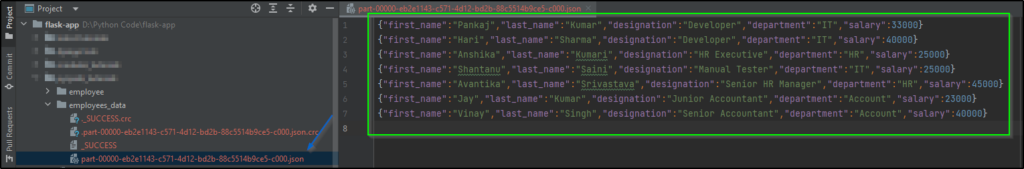

write is a property of the PySpark DataFrame that returns an instance of the DataFrameWriter class. write.json() creates a directory with the name passed to it, and that directory contains one or more .json part files (typically one per partition), each holding a portion of the data with one JSON object per line.

If you want to write the PySpark DataFrame into a single JSON file, use the coalesce() method. coalesce() takes the target number of partitions; coalesce(1) reduces the DataFrame to a single partition before writing, so only one .json file is produced.

    from pyspark.sql import SparkSession

    data = [
        ("Pankaj", "Kumar", "Developer", "IT", 33000),
        ("Hari", "Sharma", "Developer", "IT", 40000),
        ("Anshika", "Kumari", "HR Executive", "HR", 25000),
        ("Shantanu", "Saini", "Manual Tester", "IT", 25000),
        ("Avantika", "Srivastava", "Senior HR Manager", "HR", 45000),
        ("Jay", "Kumar", "Junior Accountant", "Account", 23000),
        ("Vinay", "Singh", "Senior Accountant", "Account", 40000),
    ]

    columns = ["first_name", "last_name", "designation", "department", "salary"]

    # creating spark session
    spark = SparkSession.builder.appName("testing").getOrCreate()

    # creating dataframe
    df = spark.createDataFrame(data, columns)

    # write PySpark DataFrame to single json file
    df.coalesce(1).write.json("employees_data")

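After the above code finishes, a new directory named employees_data is created, and inside it a single .json part file, as the screenshot in this section shows. Note that running the code a second time fails because the directory already exists, unless a save mode such as write.mode("overwrite") is set.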
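Each part file that write.json() produces stores one JSON object per line, so the output directory can be read back without Spark. The following is a minimal sketch using only the Python standard library; the helper name read_spark_json_dir is hypothetical, and it assumes the part files follow Spark’s usual part-*.json naming.

```python
import glob
import json
import os


def read_spark_json_dir(directory):
    """Parse every part-*.json file in a Spark output directory into dicts."""
    records = []
    # Sort the part files so the records come back in a stable order.
    for path in sorted(glob.glob(os.path.join(directory, "part-*.json"))):
        with open(path, encoding="utf-8") as handle:
            for line in handle:
                line = line.strip()
                if line:  # skip blank lines
                    records.append(json.loads(line))
    return records


if __name__ == "__main__":
    # "employees_data" is the directory name used in the write.json() example.
    for record in read_spark_json_dir("employees_data"):
        print(record["first_name"], record["salary"])
```

The glob pattern skips the marker and checksum files that Spark places next to the data, so only the actual JSON part files are parsed.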

Complete Source Code

You can find the complete source code here.

    from pyspark.sql import SparkSession

    data = [
        ("Pankaj", "Kumar", "Developer", "IT", 33000),
        ("Hari", "Sharma", "Developer", "IT", 40000),
        ("Anshika", "Kumari", "HR Executive", "HR", 25000),
        ("Shantanu", "Saini", "Manual Tester", "IT", 25000),
        ("Avantika", "Srivastava", "Senior HR Manager", "HR", 45000),
        ("Jay", "Kumar", "Junior Accountant", "Account", 23000),
        ("Vinay", "Singh", "Senior Accountant", "Account", 40000),
    ]

    columns = ["first_name", "last_name", "designation", "department", "salary"]

    # creating spark session
    spark = SparkSession.builder.appName("testing").getOrCreate()

    # creating dataframe
    df = spark.createDataFrame(data, columns)

    # converting PySpark DataFrame to JSON using toJSON()
    json_data = df.toJSON().collect()
    print(json_data)

    # convert pyspark dataframe to pandas dataframe and convert it into JSON
    pandas_df = df.toPandas()
    json_data = pandas_df.to_json(orient='records')
    print(json_data)

    # write PySpark DataFrame to single json file
    df.coalesce(1).write.json("employees_data")


Conclusion

I hope the process of writing a PySpark DataFrame to JSON was easy and straightforward. As PySpark developers, data engineers, and data analysts, we should know these techniques because real-life projects often involve JSON data. In those situations, we can use any one of the three methods to convert a PySpark DataFrame to JSON.

If you like this article, please share and keep visiting for further PySpark tutorials.

Thanks for taking the time to read this article.

Have a nice day…